Studies of search habits reveal that people engage in many search tasks involving collaboration with others, such as travel planning, organizing social events, or working on a homework assignment. However, current Web search tools are designed for a single user, working alone. We introduce SearchTogether, a prototype that enables groups of remote users to synchronously or asynchronously collaborate when searching the Web. We describe an example usage scenario, and discuss the ways SearchTogether facilitates collaboration by supporting awareness, division of labor, and persistence. We then discuss the findings of our evaluation of SearchTogether, analyzing which aspects of its design enabled successful collaboration among study participants.

We present Sikuli, a visual approach to search and automation of graphical user interfaces using screenshots. Sikuli allows users to take a screenshot of a GUI element (such as a toolbar button, icon, or dialog box) and query a help system using the screenshot instead of the element's name. Sikuli also provides a visual scripting API for automating GUI interactions, using screenshot patterns to direct mouse and keyboard events. We report a web-based user study showing that searching by screenshot is easy to learn and faster to specify than keywords. We also demonstrate several automation tasks suitable for visual scripting, such as map navigation and bus tracking, and show how visual scripting can improve interactive help systems previously proposed in the literature.

To create collections, like music playlists from personal media libraries, users today typically do one of two things. They either manually select items one by one, which can be time-consuming, or they use an example-based recommendation system to automatically generate a collection. While such automatic engines are convenient, they offer the user limited control over how items are selected. Based on prior research and our own observations of existing practices, we propose a semi-automatic interface for creating collections that combines automatic suggestions with manual refinement tools. Our system includes a keyword query interface for specifying high-level collection preferences (e.g., "some rock, no Madonna, lots of U2"), as well as three example-based collection refinement techniques: 1) a suggestion widget for adding new items in-place in the context of the collection; 2) a mechanism for exploring alternatives for one or more collection items; and 3) a two-pane linked interface that helps users browse their libraries based on any selected collection item. We demonstrate our approach with two applications. SongSelect helps users create music playlists, and PhotoSelect helps users select photos for sharing. Initial user feedback is positive and confirms the need for semi-automated tools that give users control over automatically created collections.

Internet usage on mobile devices continues to grow as users seek anytime, anywhere access to information. Because users frequently search for businesses, directory assistance has been the focus of many voice search applications utilizing speech as the primary input modality. Unfortunately, mobile settings often contain noise which degrades performance. As such, we present Search Vox, a mobile search interface that not only facilitates touch and text refinement whenever speech fails, but also allows users to assist the recognizer via text hints. Search Vox can also take advantage of any partial knowledge users may have about the business listing by letting them express their uncertainty in an intuitive way using verbal wildcards. In simulation experiments conducted on real voice search data, leveraging multimodal refinement resulted in a 28% relative reduction in error rate. Providing text hints along with the spoken utterance resulted in even greater relative reduction, with dramatic gains in recovery for each additional character.
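To illustrate how a text hint can narrow a recognizer's output, the following is a minimal sketch, not Search Vox's actual implementation. It assumes the recognizer returns an n-best list of `(listing, score)` pairs and that a hint matches when any word of a listing starts with the typed characters; the function name `rerank_with_hint`, the matching rule, and the sample listings are all hypothetical.

```python
def rerank_with_hint(n_best, hint):
    """Keep hypotheses with a word starting with the typed hint, best score first.

    n_best: list of (listing_text, score) pairs (assumed recognizer output).
    hint:   characters typed by the user (assumed prefix-of-a-word matching).
    """
    hint = hint.lower()
    matches = [
        (text, score)
        for text, score in n_best
        if any(word.startswith(hint) for word in text.lower().split())
    ]
    # Higher score means the recognizer was more confident.
    return sorted(matches, key=lambda pair: pair[1], reverse=True)


# Made-up n-best list for a noisy utterance.
n_best = [
    ("Home Depot", 0.41),
    ("Home Deli", 0.33),
    ("Dome Theater", 0.26),
]

print(rerank_with_hint(n_best, "d"))    # all three listings still match
print(rerank_with_hint(n_best, "del"))  # only "Home Deli" remains
```

Each additional hint character shrinks the candidate set in this toy example, which is one plausible intuition for why longer hints aid recovery.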

We present three new interaction techniques for aiding users in collecting and organizing Web content. First, we demonstrate an interface for creating associations between websites, which facilitate the automatic retrieval of related content. Second, we present an authoring interface that allows users to quickly merge content from many different websites into a uniform and personalized representation, which we call a card. Finally, we introduce a novel search paradigm that leverages the relationships in a card to direct search queries to extract relevant content from multiple Web sources and fill a new series of cards instead of just returning a list of webpage URLs. Preliminary feedback from users is positive and validates our design.

Re-finding, a common Web task, is difficult when previously viewed information is modified, moved, or removed. For example, if a person finds a good result using the query "breast cancer treatments", she expects to be able to use the same query to locate the same result again. While re-finding could be supported by caching the original list, caching precludes the discovery of new information, such as, in this case, new treatment options. People often use search engines to simultaneously find and re-find information. The Re:Search Engine is designed to support both behaviors in dynamic environments like the Web by preserving only the memorable aspects of a result list. A study of result list memory shows that people forget a lot. The Re:Search Engine takes advantage of these memory lapses to include new results where old results have been forgotten.
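The idea of keeping remembered results in place while filling forgotten slots with new ones can be sketched as follows. This is an illustrative assumption about one way such a merge could work, not the Re:Search Engine's algorithm: the set of remembered results is simply passed in, the old list is assumed to contain no duplicates, and the name `merge_results` is hypothetical.

```python
def merge_results(old, remembered, new):
    """Return a list the same length as `old`.

    Results the user is assumed to remember keep their original rank;
    every other slot is filled with a new result when one is available,
    falling back to the forgotten old results otherwise.
    """
    kept = {r for r in old if r in remembered}
    fillers = []
    # Prefer new results, then forgotten old ones, without duplicates.
    for r in list(new) + list(old):
        if r not in kept and r not in fillers:
            fillers.append(r)
    fill = iter(fillers)
    return [r if r in kept else next(fill) for r in old]


old_list = ["A", "B", "C", "D"]   # results shown for the original query
remembered = {"A", "C"}           # assumed to be memorable to the user
new_list = ["E", "A", "F", "C"]   # results for the same query today

print(merge_results(old_list, remembered, new_list))
# ['A', 'E', 'C', 'F']
```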

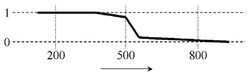

Directional faceted browsers, such as the popular column browser iTunes, let a person pick an instance from any column-facet to start their search for music. The expected effect is that any columns to the right are filtered. In keeping with this directional filtering from left to right, however, the unexpected effect is that the columns to the left of the click provide no information about the possible associations to the selected item. In iTunes, this means that any selection in the Album column on the right returns no information about either the Artists (immediate left) or Genres (leftmost) associated with the chosen album.

Backward Highlighting (BH) is our solution to this problem, which allows users to see and utilize, during search, associations in columns to the left of a selection in a directional column browser like iTunes. Unlike other possible solutions, this technique allows such browsers to keep direction in their filtering, and so provides users with the best of both directional and non-directional styles. As well as describing BH in detail, this paper presents the results of a formative user study, showing benefits for both information discovery and subsequent retention in memory.
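The difference between directional filtering and Backward Highlighting can be sketched on a tiny library. This is a simplified illustration under stated assumptions, not the paper's implementation: tracks are plain dictionaries, only a single selection is considered, and the names `COLUMNS` and `select` as well as the sample data are hypothetical.

```python
COLUMNS = ("genre", "artist", "album")  # left-to-right column order


def select(tracks, column, value):
    """Return what each other column shows after one selection.

    Columns to the right are filtered to matching values (directional
    filtering). Columns to the left keep all their values but report
    which ones are associated with the selection (backward highlighting).
    """
    idx = COLUMNS.index(column)
    matching = [t for t in tracks if t[column] == value]
    view = {}
    for i, name in enumerate(COLUMNS):
        if i < idx:
            view[name] = {
                "shown": sorted({t[name] for t in tracks}),
                "highlighted": sorted({t[name] for t in matching}),
            }
        elif i > idx:
            view[name] = {
                "shown": sorted({t[name] for t in matching}),
                "highlighted": [],
            }
    return view


# Made-up sample library.
tracks = [
    {"genre": "Rock", "artist": "U2", "album": "War"},
    {"genre": "Rock", "artist": "U2", "album": "Boy"},
    {"genre": "Pop", "artist": "Madonna", "album": "Music"},
]

view = select(tracks, "album", "War")
print(view["genre"])   # all genres shown, "Rock" highlighted
print(view["artist"])  # all artists shown, "U2" highlighted
```

Selecting in the leftmost column instead, for example `select(tracks, "genre", "Rock")`, only filters the columns to its right, which matches the directional behavior described above.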

Modern mobile phones can store a large amount of data, such as contacts, applications and music. However, it is difficult to access specific data items via existing mobile user interfaces. In this paper, we present Gesture Search, a tool that allows a user to quickly access various data items on a mobile phone by drawing gestures on its touch screen. Gesture Search contributes a unique way of combining gesture-based interaction and search for fast mobile data access. It also demonstrates a novel approach for coupling gestures with standard GUI interaction. A real-world deployment with mobile phone users showed that Gesture Search enabled fast, easy access to mobile data in their day-to-day lives. Gesture Search has been released to the public and is currently in use by hundreds of thousands of mobile users. It was rated positively by users, with a mean of 4.5 out of 5 across more than 5,000 ratings.

Programmers regularly use search as part of the development process, attempting to identify an appropriate API for a problem, seeking more information about an API, and seeking samples that show how to use an API. However, neither general-purpose search engines nor existing code search engines currently fit their needs, in large part because the information programmers need is distributed across many pages. We present Assieme, a Web search interface that effectively supports common programming search tasks by combining information from Web-accessible Java Archive (JAR) files, API documentation, and pages that include explanatory text and sample code. Assieme uses a novel approach to finding and resolving implicit references to Java packages, types, and members within sample code on the Web. In a study of programmers performing searches related to common programming tasks, we show that, compared with a general Web search interface, programmers using Assieme obtain better solutions with fewer queries in the same amount of time.